A long gap since my last blog entry – for which I’m going to mostly blame Kscope…

I’ve mentioned before that I really enjoy the Unix tools and am working at becoming more competent with them. In this post, I’m going to explain why adding a new dimension to an ASO cube while retaining existing data is slightly harder than doing the same to a BSO cube, and then show how I used a very simple script (ksh, but these are such basic commands that I’m sure it can be translated easily if not used exactly as is) to modify native export files and solve the problem.

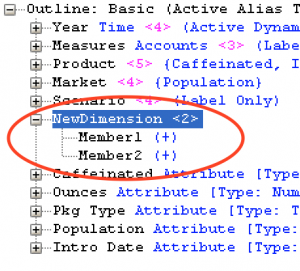

Suppose we want to add a new dimension to a BSO cube using the EAS outline editor. In this example, we’re adding a dimension named “NewDimension” to Sample.Basic:

On saving the outline, EAS helpfully offers to let us choose a member in the new dimension to which all existing data will be sent. Of course, if “real” history is available, it may make more sense to clear and reload the cube with an additional field. But that’s not always the case, and it’s nice to be given the option:

Now let’s try the same trick in ASO, here adding “NewDimension” to ASOsamp.Sample:

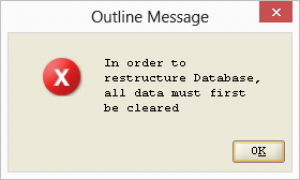

On saving the outline this time, EAS is not so helpful. The only available option is to clear all data:

But suppose we do want to keep the data, and load it all to one single member of the new dimension (say, [Member1]). One idea would be to export the data in column format, create a load rule and then use the header to force all data to [Member1]. But that won’t work, because ASO doesn’t permit columnar exports. The most efficient way of getting all our data out of an ASO cube is to create a native format export, which not only doesn’t require a load rule but is actually incompatible with the use of one.

Here’s the first few lines of a native export from ASOsamp.Sample taken before adding the new dimension; notice that the column structure is not consistent, which is both the reason why a native export can’t be used with a load rule and the reason why native exports are typically much more space-efficient than columnar ones:

"Original Price" "Price Paid" "Units" "Transactions" "Returns" "Curr Year" "Mar" "Sale" "Cash" "No Promotion" "1 to 13 Years" "Under 20,000" "Digital Cameras" "017589" "13628" 448 448 1 3 "Memory" "13738" 327 327 3 1 "13626" 206 206 2 2 "14036" 234 234 2 1 "13628" 220 220 2 1 "13762" 226 226 2 2 "13794" 360 360 3 1 "13674" 230 230 2 2 "Other Accessories" "14020" 169.5 169.5 3 2 ...

A native export always starts out with a single header line (see ‘Update’ below) that describes what is represented by each numeric value on all subsequent lines. So in this case, the first numeric value on any given line will always represent “Original Price”. But after that, the format gets hard to follow, because the secret to the native export’s small size is this: A member name is only written to the file when the member to which the following data applies changes. So in the example above we see that in line two the [Time] dimension member [Mar] and the [Products] dimension member [Digital Cameras] (among others) both appear. That means that the line two data is for ([Mar], [Digital Cameras]). On line three, while a [Products] dimension member [Memory] appears, no further [Time] dimension member is shown. All this means is that the line three data is still data for [Mar]. On line four and for several lines beyond, we don’t even see a [Products] member, meaning all that data (until [Other Accessories] appears on line ten) is for both [Mar] and [Memory].

While this structure makes the native export hard to read, it actually makes it very easy to modify given that our objective is simply to send all the data to a single member of a brand new dimension. We only need to ensure that the selected new dimension member’s name appear once in each export file, before any data values. The easiest way to do this is to insert a value between the first and second lines in the file (see ‘Update’ below). Since for real-world cubes there may be more than one export file created and the very large size (2GB) of the files makes use of a manual editor problematic, I wrote a shell script to do this:

for file in *.txt do head -1 "$file" > new_"$file" echo \"Member1\" >> new_"$file" tail -n +2 "$file" >> new_"$file" done

The script takes every .txt extension file in the current directory – I am assuming that the only text files in the current directory are the relevant set of export files – and creates a new version prefixed “new_”. The new version contains, in order:

- Line one from the original file – that line described above that contains the “header” list of data value fields

- An additional line containing only “Member1”

- Every subsequent line from the original file

So in our current example:

"Original Price" "Price Paid" "Units" "Transactions" "Returns" "Member1" "Curr Year" "Mar" "Sale" "Cash" "No Promotion" "1 to 13 Years" "Under 20,000" "Digital Cameras" "017589" "13628" 448 448 1 3 "Memory" "13738" 327 327 3 1 "13626" 206 206 2 2 "14036" 234 234 2 1 "13628" 220 220 2 1 "13762" 226 226 2 2 "13794" 360 360 3 1 "13674" 230 230 2 2 "Other Accessories" "14020" 169.5 169.5 3 2 ...

And that’s all there is to it! The new dimension can now be added to the outline (discarding all data) and the modified export files reloaded. Not quite as easy as for BSO, obviously, but not terribly difficult either once you know the trick.

Update July 26th 2016

Two observations:

First, in the comments below Kevin kindly shared his experience that it isn’t strictly necessary for the new dimension member to appear on the second line, i.e. after the ‘header’ line. Essbase will still interpret the file if the new dimension member appears before the header line. I was surprised by this, but it does seem to be the case in my testing. The export file will load successfully either way, but the script is even simpler if you add the new member on the first line (see Kevin’s comment).

Second, I have noticed that in some export files there is more than one header line, and the numeric values in one part of the file can actually belong to a different set of members than the numeric values in another. For example, I have seen export files that look (conceptually – this is a made-up example) like this:

"Original Price" "Price Paid" "Units" "Curr Year" "Mar" "Sale" "Cash" "No Promotion" "1 to 13 Years" "Under 20,000" "Digital Cameras" "017589" "13628" 448 448 1 3 "Memory" "13738" 327 327 3 "13626" 206 206 2 "14036" 234 234 2 "13628" 220 220 2 "Returns" "Price Paid" "Units" "Curr Year" "Mar" "Sale" "Cash" "No Promotion" "1 to 13 Years" "Under 20,000" "Digital Cameras" "017589" "13628" 448 448 1 3 "Memory" "13738" 327 327 3 "13762" 226 226 2 "13794" 360 360 3 "13674" 230 230 2 ...

This second observation makes no difference to the technique I describe as far as I can tell, but I’m making it here to correct my earlier, incorrect statement that there was always one single header line. My guess is that Essbase identifies a header line by seeing more than one member of the same dimension appear consecutively.

Hello,

good article.

I found out that you can also write the member to the first line before all other Data. There is no need to write it to line 2. Your script would then look like this.

for file in *.txt

do

echo \”Member1\” >> new_”$file”

tail -n +1 “$file” >> new_”$file”

done

Greets Kevin

Wow, thank you for the comment, Kevin.

That’s even simpler – I couldn’t quite believe it until I tested it myself. I’m really surprised, I guess that Essbase must know it’s found the header line when it sees consecutive members from the same dimension, rather than always having to see it as the first line.

Good to know (and makes this even simpler, as you say).

Best,

Tim

Informative. Thanks!

Good article. Thanks.

Thanks for the blog! You wrote: The easiest way to do this is to insert a value between the first and second lines in the file

The second easiest way is maybe to convert the native format file into a column format. This would give you also the possibility to filter, search and replace and do a lot of other things just using a rule file.

The conversion from native format to column format can be done with CubeSavvy Utilities. (There was a presentation at KSCOPE16… but with so many parallel sessions, one can not attend all.)

The utilities can be downloaded for free at http://www.cubesavvy.com. The utility you are looking for is called ASO Export Parser.

It is a great tip. Below is one liner in bash with a bit of explanation for the cryptic stuff

# one liner

# -i : infile replace , .bak : backup original file with .bak extension

# ‘ 1 i : insert before 1st line,

sed -i.bak ‘1 iMember1’ *.txt

Very Informative – I had never tried ASO until recent requirement. But I’m unable to import data back into ASO – The only difference is that I’m trying to do in PBCS. It is not even trying to read the file when i modify the export file.

On top of not being helpful, PBCS is not even giving helpful error message – it says “No such file or directory” – when it is even showing the export file (.text) name in error message, when i’m only giving it zip file name for import command.

Would be helpful if you have any tips in this case!

Thanks!!

I have recently been asked to enhance an ASO cube with an extra dimension. While BSO will show some performance loss due to extra blocks being created, how does adding extra dimension in ASO affect the performance?

Good question, John. It depends. There are several drivers of query performance in ASO, but I think the most important ones are the size of the input data and how well any aggregations are tuned.

If the extra dimension is related to another dimension (there are technical reasons why in ASO you might choose to model what is ‘logically’ an attribute dimension as a real dimension) then the number of input cells may not increase much. For example, suppose you added a ‘Brand’ dimension to a cube that already has a ‘Product’ dimension, and every Product has exactly one brand – the number of input cells is not going to increase at all. If, on the other hand, you add a ‘Day’ dimension to a cube that already has a ‘Period’ dimension, you might expect that to increase the number of input cells by up to a factor of ~30 (depending on how dense your data is). Adding dimensions may (or may not) also increase the key size, which will increase the overall input data size accordingly.

On the aggregation side, my experience is that tuning aggregate views gets harder the more dimensions you have to take into consideration, and if you have a fixed aggregation execution time, there will be more tradeoffs the more dimensions you add. Using the ‘cheat’ method in this blog post, there are initially no aggregations for the new dimension (or to put it another way, all aggregate views are calculated at level zero in the new dimension).

So overall – the best you can hope for is no noticeable impact. If the new dimension is queried heavily at upper levels, AND you previously depended on aggregate views for acceptable performance, you will probably need to tweak or add to your aggregate views. And if you are adding an ‘orthogonal’ dimension that explodes the number of input data cells, performance is almost certain to get worse.